- New deep learning-based algorithm tracks and labels body parts of moving species using minimal training data.

- It doesn’t require a computational body model, time data, or a stick figure.

To understand the brain of any species, it’s necessary to accurately quantify their behavior. Video tracking is one of the best options to observe and record animal behavior in different configurations. It greatly simplifies the analysis and enables high-accuracy tracing of body parts.

However, extracting specific aspects of a behavior for detailed investigation can be a tedious and time-consuming process. Existing computer-based tracking relies on reflective markers attached to body parts, and the number and position of those markers must be decided before recording.

Now, researchers at Harvard University and the University of Tübingen have developed an AI tool named DeepLabCut that automatically tracks and labels body parts of moving animals. This markerless pose estimation technique is based on deep learning methods that deliver good results with minimal training data.

What Exactly Have They Done?

The researchers examined the feature detector architecture from a recently developed multi-person pose estimation model, DeeperCut. They showed that a small number of training images (about 200) is enough to train this neural network to achieve human-like accuracy.
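One common way to compare a tracker against human labels is the average pixel distance between the predicted and the hand-labeled position of each body part. The sketch below assumes that metric; the function name and the sample coordinates are hypothetical and are not taken from the paper.

```python
import math


def mean_pixel_error(predicted, labeled):
    """Mean Euclidean distance (in pixels) between matching (x, y) points."""
    if len(predicted) != len(labeled) or not predicted:
        raise ValueError("need two non-empty point lists of equal length")
    distances = [math.dist(p, q) for p, q in zip(predicted, labeled)]
    return sum(distances) / len(distances)


# Hypothetical snout positions on three frames: network output vs. human labels.
network = [(103.0, 204.0), (110.0, 198.0), (121.0, 190.0)]
human = [(100.0, 200.0), (110.0, 200.0), (121.0, 190.0)]

# Per-frame distances are 5, 2 and 0 pixels, so the mean is 7/3.
print(round(mean_pixel_error(network, human), 2))
```

A tracker could be called human-like under this measure if its error against one annotator is about as small as the disagreement between two annotators labeling the same frames.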

This is made possible by transfer learning, a machine learning method in which a model trained on one task is reused for another, related task. In this research, feature detectors based on extremely deep neural networks were pre-trained on a large dataset (ImageNet) to recognize objects.

As a result, one can adapt these robust feature detectors to a new task by labeling only a few hundred frames. Once trained, the network can localize a wide range of experimentally relevant body parts.
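The idea behind transfer learning can be illustrated with a deliberately tiny model. The sketch below is a toy, not the DeepLabCut network: a two-parameter linear model is pre-trained on a large "source" dataset and then fine-tuned on four "target" examples, and its error is compared with a model trained from scratch on the same four examples. All datasets, names, and step counts are assumptions chosen for illustration.

```python
def mean_squared_error(weights, samples):
    """Average squared prediction error of a (bias, slope) model."""
    bias, slope = weights
    return sum((bias + slope * x - y) ** 2 for x, y in samples) / len(samples)


def gradient_descent(weights, samples, steps, lr=0.1):
    """Run full-batch gradient descent starting from the given weights."""
    bias, slope = weights
    n = len(samples)
    for _ in range(steps):
        errors = [(bias + slope * x - y, x) for x, y in samples]
        bias -= lr * sum(e for e, _ in errors) / n
        slope -= lr * sum(e * x for e, x in errors) / n
    return bias, slope


# Large "source" dataset (stand-in for ImageNet): y = 3x + 1.
source = [(x, 3 * x + 1) for x in (i / 100 - 1 for i in range(201))]
# Tiny "target" dataset (stand-in for a few labeled frames): y = 3x + 1.5.
target = [(x, 3 * x + 1.5) for x in (-1.0, -1 / 3, 1 / 3, 1.0)]

pretrained = gradient_descent((0.0, 0.0), source, steps=500)
fine_tuned = gradient_descent(pretrained, target, steps=20)
from_scratch = gradient_descent((0.0, 0.0), target, steps=20)

print("fine-tuned error:  ", mean_squared_error(fine_tuned, target))
print("from-scratch error:", mean_squared_error(from_scratch, target))
```

With the same short training budget, the model that starts from pre-trained weights ends up much closer to the target task, because most of what it needs was already learned on the source data.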

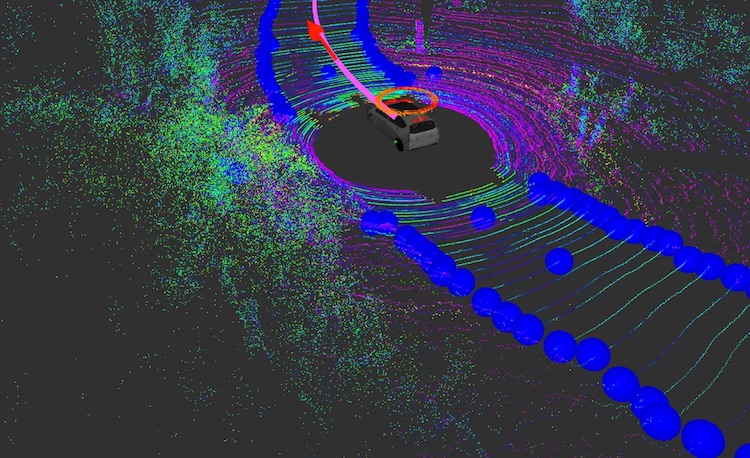

The researchers demonstrated the capability of DeepLabCut by tracking the ears, snout, and tail base of a mouse during an odor-guided navigation task. They also tracked several body parts of a fruit fly in a 3D chamber.

The neural networks were trained on NVIDIA Titan Xp and GeForce GTX 1080 Ti GPUs using the CUDA-accelerated TensorFlow deep learning framework. With this hardware, one can process 682 × 540 pixel frames at 30 fps.
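To put the reported figures in perspective, the short calculation below converts the frame size and frame rate into pixels per second and a per-frame time budget. It is plain arithmetic on the numbers quoted above; the variable names are illustrative.

```python
WIDTH, HEIGHT, FPS = 682, 540, 30

pixels_per_frame = WIDTH * HEIGHT            # 368,280 pixels
pixels_per_second = pixels_per_frame * FPS   # 11,048,400 pixels
frame_budget_ms = 1000 / FPS                 # about 33.3 ms per frame

print(f"{pixels_per_frame:,} pixels per frame")
print(f"{pixels_per_second:,} pixels per second")
print(f"{frame_budget_ms:.1f} ms available per frame")
```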

The tool is capable of providing real-time feedback based on posture estimates extracted from video. Moreover, one can adaptively crop input frames around the animal to further increase processing speed, or adapt the network architecture to reduce processing time.
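Adaptive cropping can be sketched as follows: take the body-part positions from the previous frame, add a safety margin, and clip the resulting box to the frame. This is a minimal illustration under assumed names and an assumed margin of 40 pixels, not the cropping code used by the researchers.

```python
def crop_box(keypoints, frame_width, frame_height, margin=40):
    """Return (left, top, right, bottom) around the keypoints, clipped to the frame.

    right and bottom are exclusive, so the box can be used directly for slicing.
    """
    xs = [x for x, _ in keypoints]
    ys = [y for _, y in keypoints]
    left = max(0, int(min(xs)) - margin)
    top = max(0, int(min(ys)) - margin)
    right = min(frame_width, int(max(xs)) + margin + 1)
    bottom = min(frame_height, int(max(ys)) + margin + 1)
    return left, top, right, bottom


# Hypothetical snout, ear and tail-base positions from the previous frame.
previous_pose = [(300, 200), (340, 260), (310, 230)]
left, top, right, bottom = crop_box(previous_pose, 682, 540)

cropped_pixels = (right - left) * (bottom - top)
print((left, top, right, bottom))  # (260, 160, 381, 301)
print(f"{cropped_pixels / (682 * 540):.1%} of the full frame")
```

The margin has to be large enough to cover how far the animal can move between two frames; otherwise a body part can leave the cropped region and be lost.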

Reference: arXiv:1804.03142 | GitHub

Overall, DeepLabCut works in four stages:

- Extract multiple frames from video for labeling

- Use the labels to generate training data

- Train the neural network on the required set of features

- Extract the positions of these features from unlabeled data
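The first stage, choosing which frames to label, can be sketched as picking evenly spaced frames from the video. Uniform spacing is an assumption made here for simplicity, and `pick_frames_to_label` is a hypothetical helper, not part of the DeepLabCut toolbox.

```python
def pick_frames_to_label(total_frames, n_labels=200):
    """Return evenly spaced frame indices to hand to a human annotator."""
    if total_frames <= 0 or n_labels <= 0:
        raise ValueError("total_frames and n_labels must be positive")
    if n_labels >= total_frames:
        return list(range(total_frames))
    step = total_frames / n_labels
    return [int(i * step) for i in range(n_labels)]


# A 10-minute video at 30 fps has 18,000 frames; label about 200 of them.
indices = pick_frames_to_label(30 * 60 * 10)
print(len(indices), indices[:3], indices[-1])  # 200 [0, 90, 180] 17910
```

In practice the selected frames should cover the range of postures and lighting conditions in the experiment, so evenly spaced sampling is only a starting point.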

Courtesy of researchers

How Is This Helpful?

The method described above doesn’t need any computational body model, time data, stick figure or complicated inference algorithm. It can be rapidly deployed to multiple behaviors posing qualitatively different challenges to computer vision.

Although the researchers demonstrated DeepLabCut on Drosophila, mice, and horses, the method is not limited to these animals and can be applied to other species as well.

Tracking animals on video can uncover new insights into their biomechanics and help us understand how their brains function. In humans, it can improve techniques used in physical therapy and help athletes reach milestones that weren’t possible in the past.