A data center is a physical facility that private companies and governments use to store and share applications and data. Most businesses depend on the data center’s reliability and security to run their IT operations optimally.

A data center’s design is built around a network of computing and storage resources that enables the delivery of shared applications and data. However, not all data centers are the same.

The earliest data centers date back to the 1940s, when room-sized computers such as ENIAC required a great deal of power and a special environment to function properly. They were expensive and mostly used for military purposes.

The number of data centers worldwide increased sharply during the 1990s. To establish a presence on the internet, many organizations built large facilities called Internet Data Centers, with advanced capabilities such as crossover backup.

Today’s data centers are far different: technology has shifted from conventional physical servers to virtual networks that support applications and workloads on different levels.

The current infrastructure enables applications and data to exist and be linked across multiple data centers and private/public clouds. When a website/app is hosted in the cloud, it uses data center resources from the cloud provider.

Crucial Components Of A Data Center

A data center comprises numerous key components, such as different types of servers, storage systems, switches, routers, and application delivery controllers. When installed and implemented properly, they provide computing resources, storage infrastructure, and network infrastructure that drive applications.

Basic Standards For Data Center Infrastructure

ANSI/TIA-942-A is a widely adopted standard for data center infrastructure. It describes four levels of infrastructure resilience that broadly correspond to the four tiers defined by the Uptime Institute:

Tier I. Basic Capacity: includes an uninterruptible power supply for power sags, spikes, and outages. It protects against disruptions caused by human error but not against unexpected outages or failures.

Tier II. Redundant Capacity: provides greater resilience against disruptions and more opportunities for maintenance. Redundant power and cooling components can be removed without shutting down the facility.

Tier III. Concurrently Maintainable: provides redundant distribution paths to serve the critical environment. Any component can be shut down and removed without impacting IT operations.

Tier IV. Fault-Tolerant: insulates production capacity from almost all types of failure, so that IT operations are not affected by planned or unplanned events. With no single points of failure, this tier is designed for the highest uptime (99.995%).
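To put the 99.995% figure in perspective, an uptime percentage can be converted into the downtime it allows per year. The following is a minimal sketch of that arithmetic; the function name is illustrative, and the calculation assumes a 365-day year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years are ignored


def annual_downtime_minutes(availability_percent: float) -> float:
    """Return the downtime per year, in minutes, implied by an uptime percentage."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


# 99.995% is the Tier IV figure quoted above.
tier_iv_downtime = annual_downtime_minutes(99.995)
print(f"{tier_iv_downtime:.1f} minutes of downtime per year")
```

In other words, a facility meeting that figure could be unavailable for roughly 26 minutes in a full year.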

To better understand this technology, we need to explore different types of data centers and their purposes. Each has its own benefits, limitations, and requirements.

5. Colocation Data Center

China Unicom, one of the largest retail colocation data centers

Example: China Unicom’s Global Center data center in Hong Kong

Who uses it: Midrange to large enterprises

Also known as colo, a colocation data center is a large facility that rents rack space to businesses for their servers and other network devices. It is one of the most popular services used by organizations that may not have sufficient resources to maintain their own data center, but still want to enjoy all the benefits.

A colocation facility provides space, power, cooling, and physical security for its customers’ servers. It is also responsible for connecting their networking equipment to a variety of telecommunication and network service providers.

Companies with large geographic footprints can have their hardware distributed across multiple locations. For example, a single organization may have servers in five to six different colocation data centers.

There are several benefits of using a colocation data center:

- Scalable: As your company’s reach expands, you can quickly add new servers and other critical equipment to the facility.

- Location preference: You can select the data center location nearest to your customers.

- Predictable and lower costs: It costs much less to lease space in a colocation data center than to build your own facility. You can sign a quarterly or annual contract based on your budget.

- Reliable: Since colocation data centers are designed with high redundancy specifications, they are extremely reliable.

- Peace of mind: The facility’s technical staff takes care of tedious tasks such as managing power, installing equipment, running cables, and other technical processes. This means you don’t need to worry about server maintenance.

People often confuse a colocation data center with a colocation server rack and use the two terms interchangeably. However, they are different things.

A colocation data center is the facility as a whole, which in some arrangements is leased in its entirety to a single company, whereas a colocation rack is a space within a shared data center that an organization rents alongside other tenants.

4. Managed Data Center

Example: Fully-managed IBM Cloud Services

Who uses it: Midrange to large enterprises

This type of data center model is deployed, managed, and monitored by a 3rd-party service provider. It offers all necessary features through a managed service platform.

The data center can be either completely or partially managed. In the former, all technical details and back-end data are handled or administered by the data center provider, whereas the latter gives businesses a certain level of administrative control over the data center implementation and service.

In general, the service provider maintains all network components and services, upgrades operating systems and other system-level programs, and restores data/services in the event of disruptive events.

One can source these managed services from a fixed data center, a colocation facility, or a cloud-based data center.

For example, IBM’s data center offers a wide range of managed services directly to its clients, including managed security, network, mobility, and information services.

3. Enterprise Data Center

Google data center in Eemshaven, Netherlands | Image credit: Google

Example: Facebook’s Forest City Data Center in North Carolina

Who uses it: Large enterprises

The enterprise data center is a private facility built solely to support a single company. It can be located on-premises or at an off-premises site, whichever suits the company.

For instance, if you run a website from Canada and your target audience is students in the United States, you would likely prefer to build a data center in the US to reduce page load time.

An enterprise facility is defined more by its ownership and purpose than its size and capacity. It is well-suited for organizations with unique network requirements or those that make enough revenue to take advantage of economies of scale.

The enterprise data center typically comprises multiple data centers, each designed to sustain key functions. These sub-data centers can be further classified into three groups:

- Internet data center: supports the devices and servers essential for web applications.

- Extranet: supports business-to-business transactions within the enterprise data center network. Typically, these services are accessed over private WAN links or secure VPN connections.

- Intranet: holds the application and data within the enterprise data center. This data is used for research and development, manufacturing, marketing, and other core business functions.

The major benefit of this type of data center is that it is easy for companies to track crucial parameters (such as bandwidth and power usage) and keep their software (e.g., monitoring tools) up to date. This makes it simpler to estimate upcoming needs and scale appropriately.

However, it all comes with a cost: developing enterprise data center facilities requires large capital investments, labor, equipment maintenance, and ongoing time commitments.

2. Cloud Data Center

Example: Cloud services offered by Google, IBM, Amazon (AWS), Microsoft (Azure), etc.

Who uses it: Most organizations of any size

In a cloud data center, the actual hardware is run and managed by the cloud company, often with the help of a 3rd-party managed services provider. It allows clients to run websites/applications and manage data within a virtual infrastructure running on cloud servers.

The data is fragmented and duplicated across numerous locations as soon as it is uploaded to cloud servers. In the event of any unexpected issues, the cloud provider ensures that your backups are backed up as well.
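The idea of fragmenting data and duplicating it across locations can be illustrated with a small placement sketch. This is not how any particular cloud provider works: the function name, the location names, and the simple round-robin rule are all assumptions made for illustration.

```python
from typing import Dict, List


def place_chunks(
    data: bytes, chunk_size: int, locations: List[str], replicas: int
) -> Dict[int, List[str]]:
    """Split data into fixed-size chunks and pick `replicas` locations for each chunk.

    Locations are assigned round-robin, so the copies of a chunk land in
    different locations as long as the entries in `locations` are unique.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 1 <= replicas <= len(locations):
        raise ValueError("replicas must be between 1 and the number of locations")

    chunk_count = (len(data) + chunk_size - 1) // chunk_size  # ceiling division
    placement: Dict[int, List[str]] = {}
    for index in range(chunk_count):
        placement[index] = [
            locations[(index + offset) % len(locations)] for offset in range(replicas)
        ]
    return placement


# Hypothetical example: 10 bytes, 4-byte chunks, 3 locations, 2 copies of each chunk.
plan = place_chunks(b"0123456789", 4, ["site-a", "site-b", "site-c"], 2)
for chunk_index, sites in plan.items():
    print(chunk_index, sites)
```

Because every chunk is stored in more than one location, losing a single site in this sketch never removes the only copy of any chunk.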

A few cloud service companies offer customized clouds, giving clients singular access to their own cloud environment, known as private clouds. The public cloud providers, on the other hand, make resources available to the public via the internet. Amazon Web Services and Microsoft Azure are among the most popular public cloud providers.

Cloud services have several advantages over on-premises data centers. With the cloud, the company pays only for the hardware resources it uses. It doesn’t need to worry about regular server updates, security, cooling costs, etc. These costs are included in the monthly subscription fee.

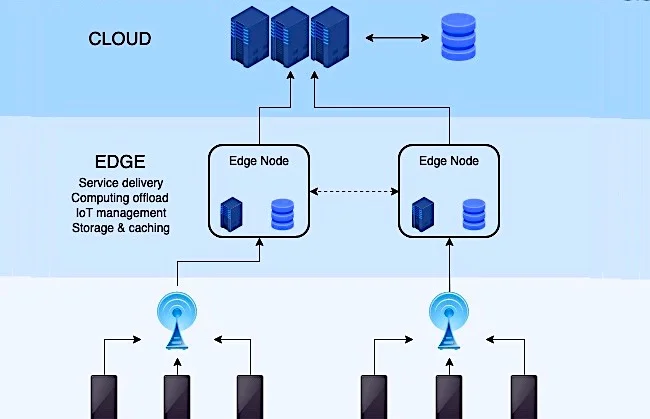

1. Edge Data Center

Still in the developing phase

Edge data centers are smaller facilities located close to the population they serve. Characterized by their size and connectivity, these data centers allow organizations to deliver content and services to local users with minimal latency.

They play a crucial role in edge computing architecture, bringing data storage and computation closer to where they are needed. Edge data centers are expected to support IoT (Internet of Things) devices and autonomous vehicles by providing additional processing capacity and improving the customer experience.

While a large portion of data collected by IoT and autonomous vehicles will be processed locally, some of it will be transmitted back to a data center. Edge facilities are connected to various other data centers or a larger central data center.

Compared to enterprise data centers running at full capacity, multiple smaller edge data centers can distribute heavy traffic loads more efficiently. They can cache high-demand content, minimizing the delay between a user’s request and the server’s response. Furthermore, distributing traffic loads also increases network reliability.
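The caching behavior described above can be sketched as a small least-recently-used (LRU) cache sitting in front of a slower origin. The class name, the `fetch_from_origin` callback, and the LRU eviction policy are assumptions chosen for illustration; real edge platforms may use different policies.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """A tiny LRU cache: serve repeat requests locally, fetch misses from the origin."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], str]) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._fetch = fetch_from_origin
        self._items: "OrderedDict[str, str]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        if key in self._items:
            # Cache hit: mark the item as most recently used and serve it locally.
            self._items.move_to_end(key)
            self.hits += 1
            return self._items[key]
        # Cache miss: take the slow path to the origin, then keep a local copy.
        self.misses += 1
        value = self._fetch(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # evict the least recently used item
        return value


# Hypothetical origin that just builds a string; a real one would be a network call.
cache = EdgeCache(capacity=2, fetch_from_origin=lambda path: f"content of {path}")
for path in ["/home", "/video", "/home", "/home"]:
    cache.get(path)
print(cache.hits, cache.misses)
```

Only the first request for each path reaches the origin in this sketch; repeat requests for popular content are answered from the edge.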

For organizations trying to enhance regional network performance or penetrate a local market, these facilities are invaluable.

According to Global Market Insights, the edge data center market size is expected to exceed $50 billion by 2032, growing at a CAGR of 19%. The rising need for high computing power is pushing service providers to place data centers closer to users, ensuring lower latency and more reliable connections.

Frequently Asked Questions

What is a Software-Defined Data Center?

The Software-Defined Data Center (SDDC) is a data storage facility in which all elements of infrastructure (including CPU, storage, networking, and security) are virtualized and delivered as a service.

SDDC is a result of years of advances in server virtualization. It extends the concepts of virtualization, such as abstraction, pooling, and automation, to all resources and services to reduce costs, increase scalability, and enhance business agility.

Like conventional data centers, SDDCs can be housed on-premises, at a managed service provider, or in public, private, or hybrid clouds.

What are data center operations?

Data center operations is a broad term that covers four major areas:

- Installation and maintenance of network resources

- Creation, enforcement, and monitoring of policies and procedures within data centers

- Tools and techniques to ensure data center security

- Monitoring systems that take care of power and cooling

Since many businesses have started shifting to cloud computing solutions, the automation of data center operations has become more critical than ever. The goal is to optimize processes and systems for performance, cost efficiency, and scalability.

What is a green data center?

A green data center is a data storage, management, and dissemination facility that runs on energy-efficient technologies. It is designed to maximize energy efficiency and minimize environmental impact.

The two most used parameters to measure power efficiency in data centers are:

- Power Usage Effectiveness (PUE)

- Carbon Usage Effectiveness (CUE)

Both were developed by The Green Grid, a trade association that works to improve IT and data center energy efficiency and eco-design.
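Both metrics are simple ratios against the energy consumed by IT equipment: PUE divides total facility energy by IT equipment energy, and CUE divides the carbon emissions caused by the facility's energy use by IT equipment energy. The sketch below shows the arithmetic; the function names and the sample figures are hypothetical.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total facility energy / IT equipment energy (1.0 is the ideal)."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    return total_facility_kwh / it_equipment_kwh


def carbon_usage_effectiveness(total_co2_kg: float, it_equipment_kwh: float) -> float:
    """CUE = CO2 emissions from facility energy use / IT equipment energy (kg CO2e per kWh)."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    return total_co2_kg / it_equipment_kwh


# Hypothetical figures for one measurement period.
pue = power_usage_effectiveness(total_facility_kwh=1500, it_equipment_kwh=1000)
cue = carbon_usage_effectiveness(total_co2_kg=600, it_equipment_kwh=1000)
print(f"PUE = {pue:.2f}, CUE = {cue:.2f} kg CO2e/kWh")
```

A PUE of 1.5 means that for every kilowatt-hour used by IT equipment, another half kilowatt-hour goes to cooling, power distribution, and other overhead.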

These facilities are gaining traction across industries and have caught the eye of many companies looking to deploy green data center solutions. According to a MarketsandMarkets report, the green data center market size is estimated to reach $155 billion by 2030.
