OpenAI is one of the most influential and disruptive forces in artificial intelligence, catalyzing the global AI race with its powerful language models like GPT-3, GPT-4, and the multimodal GPT-4o.

It serves more than 400 million weekly active users, with 92% of Fortune 500 companies leveraging its tools directly or through Microsoft integrations. Its valuation has skyrocketed to $300 billion, and its flagship product, ChatGPT, sees over 122 million monthly active visitors. [1][2]

Even as OpenAI dominates headlines and adoption metrics, surging demand for AI leaves ample room for competitors to build custom, fine-tuned AI systems tailored to specific industries.

Below, I explore OpenAI’s leading competitors, highlighting their technological strengths, product offerings, and strategic approaches. Many of these companies are developing models that are fine-tuned for safety, efficiency, or open deployment, targeting niche markets that OpenAI hasn’t fully captured.

Did you know? The open-source LLM movement is growing rapidly, with Hugging Face now offering access to over 1 million AI models. This includes a vast collection of pre-trained models for tasks like natural language processing, computer vision, and speech recognition.

14. AI21 Labs

Founded: 2017

Headquarters: Tel Aviv, Israel

Flagship Products: Jamba, Maestro

Competitive Edge: Long-context capability, Open licensing

AI21 Labs develops full-length text generation models with strong coherence, style adaptation, and factual correctness. Its early success began with Wordtune (launched 2020), an intelligent writing assistant praised by Google.

The company further distinguished itself with the Jurassic-1 and Jurassic-2 model series (2021 and 2023), known for their linguistic breadth and performance.

In 2024, AI21 introduced Jamba, a hybrid model series combining Mamba SSM and Transformer architectures, offering ultra-long context windows of up to 256,000 tokens. The models were open-sourced under the Apache 2.0 license.

In 2025, the company unveiled Maestro, an orchestration system designed to reduce hallucinations by ~50% and boost reasoning accuracy above 95%, addressing enterprise-grade reliability. [3]

13. LightOn

Founded: 2016

Total Funding: $15.8 million+

Flagship Product: Paradigm

Competitive Edge: Sovereign AI focus, French and multilingual specialization

LightOn is a Paris-based artificial intelligence and deep tech company that specializes in large language models (LLMs) and privacy-preserving enterprise AI solutions.

Initially focused on photonic computing solutions, such as its Optical Processing Unit, LightOn pivoted to enterprise generative AI by 2020. The company trained and released 12 large language models before launching its flagship on-premises platform, Paradigm, in 2021.

While the company doesn’t yet operate at the massive scale of GPT-4, its models are optimized for cost-efficient deployment, language alignment with European dialects, and enterprise customization. Its client base includes financial institutions, public-sector organizations, and research institutions.

In late 2024, LightOn became Europe’s first publicly listed GenAI startup, debuting on Euronext Growth with a valuation of around €60 million. It has received funding from the French government, BPIFrance, and leading European deep tech investors. [4]

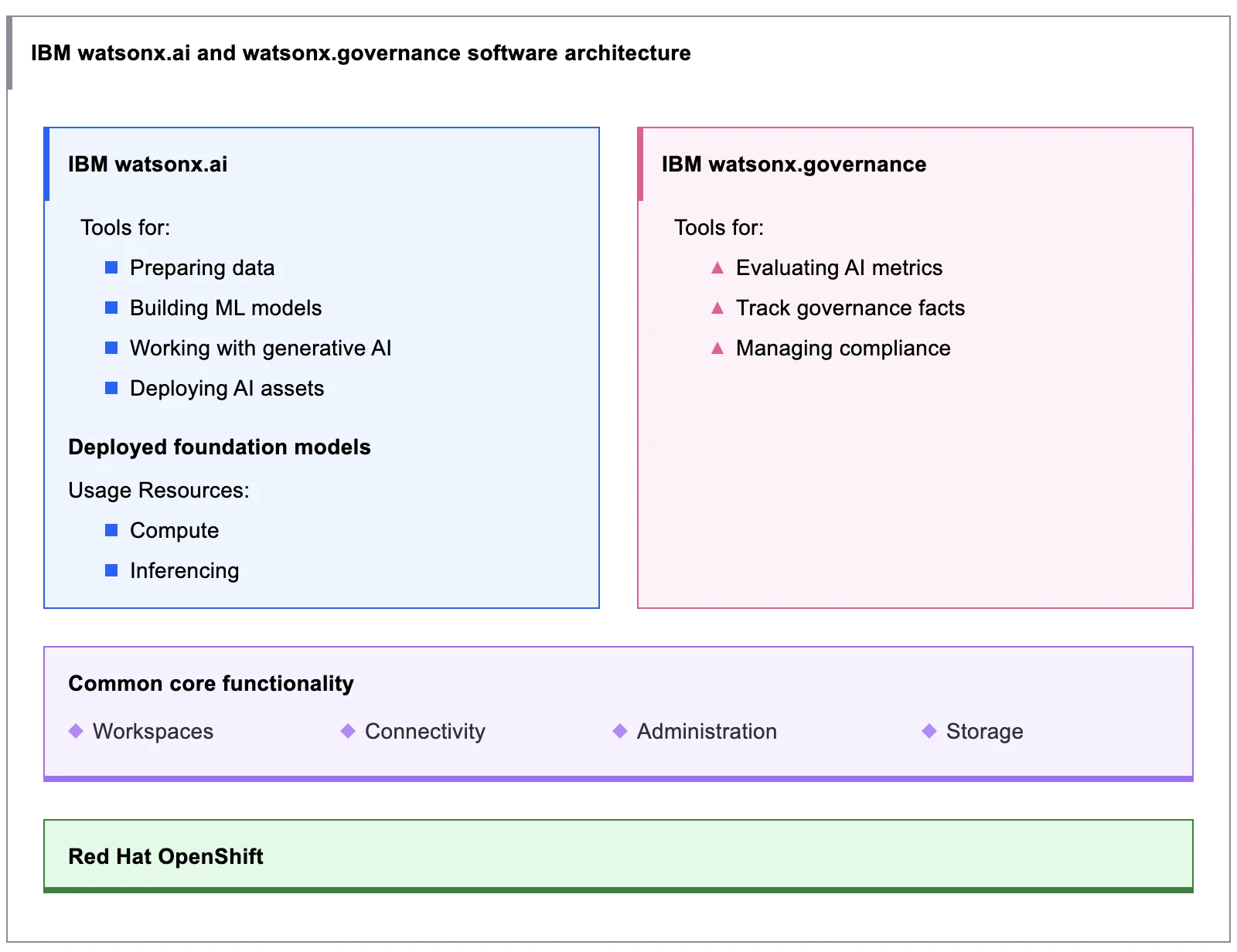

12. IBM Watsonx

Written in: Python

Core Modules: watsonx.ai, watsonx.data, watsonx.governance

Competitive Edge: Trusted enterprise focus, Hybrid-cloud mastery

IBM’s AI research dates back to the 1950s, and Watson gained global attention in 2011 when its DeepQA system defeated human champions on the US quiz show Jeopardy!

Since then, Watson has evolved into an enterprise-grade AI platform encompassing NLP, ML, hybrid cloud deployment, and generative AI through its rebranded Watsonx.

Today, Watsonx supports multiple LLMs, including IBM’s own Granite models and third-party options like LLaMA-2 and Mistral. It supports deployment across public cloud, private cloud, and edge environments, using platforms like Red Hat OpenShift and LinuxONE hardware for compliance and performance.

In 2025, IBM spotlighted two strategic themes: agentic AI and hybrid cloud. They showcased new capabilities in Watsonx Orchestrate (AI agent builder with over 150 agents), Watsonx.data (enhanced RAG and semantic search), and lightweight Granite 4.0 Tiny models suitable for consumer GPUs. [5]

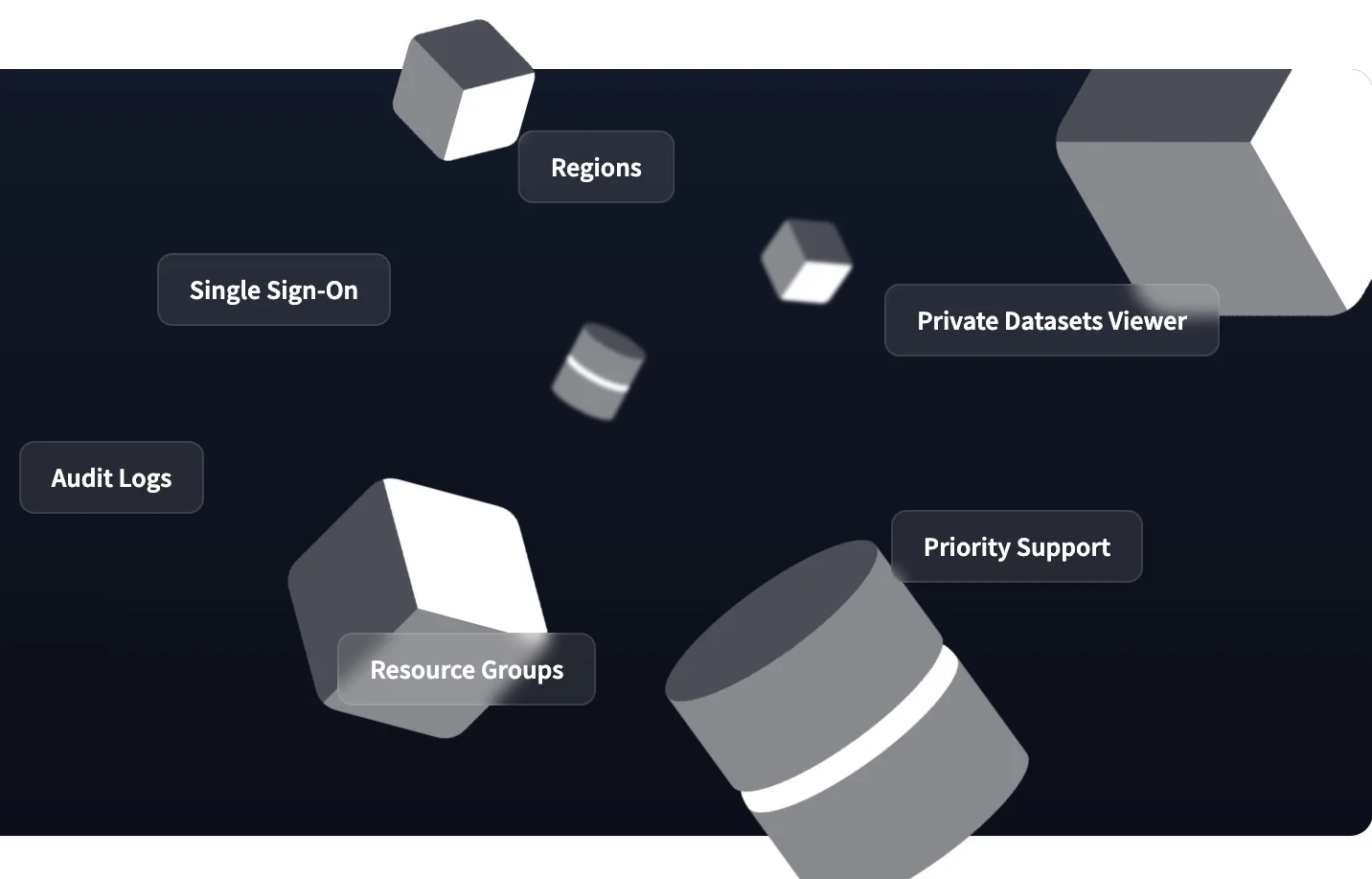

11. Hugging Face

Employees: 250+

Flagship Products: Transformers library

Competitive Edge: Interoperability, Centralized open-source ecosystem

Hugging Face is widely regarded as the “GitHub of machine learning.” It has become the central hub for open-source AI development and collaboration.

In 2018, it released its Transformers library. This library now supports open models from many major players, including OpenAI, Meta, Cohere, Google, and Mistral. It has become the industry standard for working with large language models (LLMs) in Python.

The platform has expanded into computer vision, audio, multimodal learning, reinforcement learning, and generative AI, maintaining an unwavering commitment to open science and transparency.

Today, Hugging Face hosts over 1.5 million models, 340,000 datasets, and 50,000 demo spaces.

10. DeepSeek

Headquarters: Zhejiang, China

Flagship Models: DeepSeek‑V3, R1

Competitive Edge: Open source, Cost-efficiency at scale

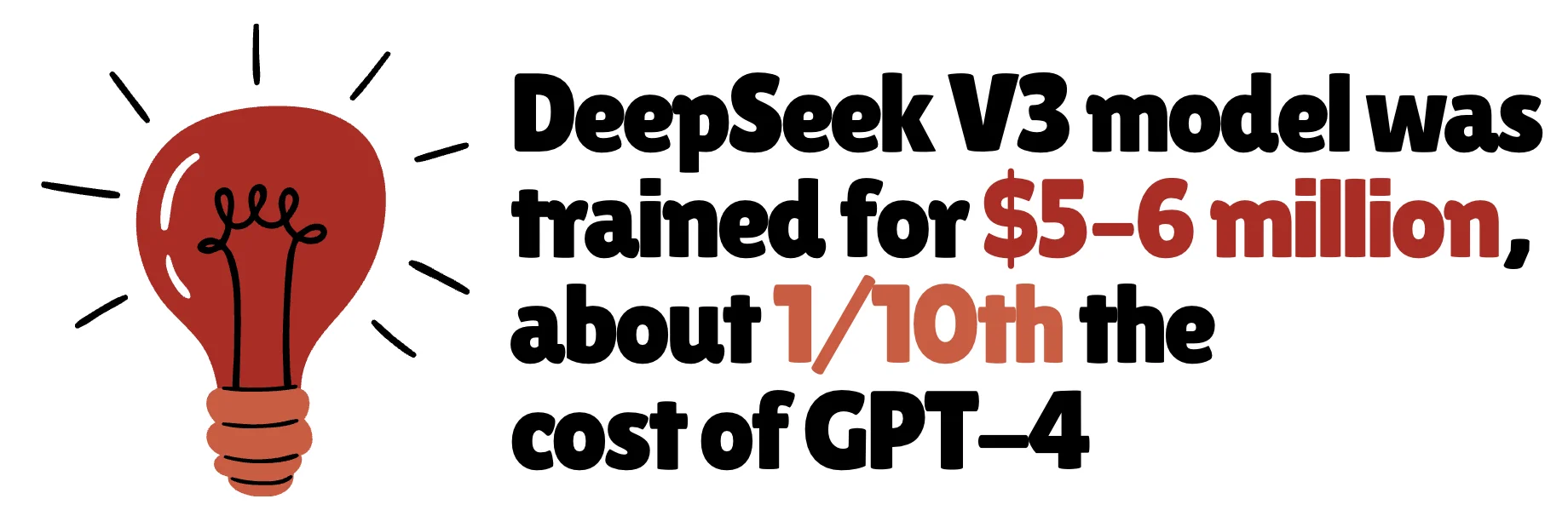

DeepSeek is a Chinese AI startup that rapidly gained global attention with its open-weight models, offering performance on par with US tech giants at a fraction of the cost.

Its flagship DeepSeek‑V3 model features an astounding 671 billion parameters (37 billion active per token) and was trained on 14.8 trillion tokens using just ~2,000 NVIDIA H800 GPUs at a reported cost of $5.58 million, compared to over $100 million and 16,000 GPUs for comparable Western models. [6]
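The reported training cost can be sanity-checked with simple arithmetic. The sketch below relies on figures from the cited DeepSeek-V3 technical report that do not appear in the text above: roughly 2.788 million H800 GPU-hours, an assumed rental price of $2 per GPU-hour, and a cluster of 2,048 GPUs. The function and constant names are illustrative only.

```python
# Back-of-the-envelope check of DeepSeek-V3's reported training cost.
# Assumptions (taken from the cited technical report, not from this article):
#   ~2.788 million H800 GPU-hours, priced at an assumed $2 per GPU-hour,
#   on a cluster of 2,048 GPUs ("~2,000" in the text above).

TOTAL_PARAMS_B = 671      # total parameters, in billions
ACTIVE_PARAMS_B = 37      # parameters active per token, in billions
GPU_HOURS = 2_788_000     # assumed total H800 GPU-hours
USD_PER_GPU_HOUR = 2.0    # assumed rental rate
NUM_GPUS = 2_048          # assumed cluster size


def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Estimated cost as GPU-hours times the hourly rental rate."""
    return gpu_hours * usd_per_gpu_hour


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of parameters used for each token in a sparse model."""
    return active_b / total_b


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Approximate training duration if all GPUs run in parallel."""
    return gpu_hours / num_gpus / 24


cost = training_cost_usd(GPU_HOURS, USD_PER_GPU_HOUR)
print(f"Estimated cost: ${cost / 1e6:.2f} million")
print(f"Active share per token: {active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B):.1%}")
print(f"Wall-clock time: {wall_clock_days(GPU_HOURS, NUM_GPUS):.0f} days")
```

Under these assumptions the estimate comes to about $5.58 million, in line with the reported figure. It covers only the rental cost of the final training run, which is one reason it is hard to compare directly with budgets quoted for other models.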

In 2025, DeepSeek introduced DeepSeek‑R1, a reasoning-optimized model that rivaled OpenAI’s o1 models in benchmarks for math, coding, and logical reasoning. Released under the MIT license, the fully open-source model has driven rapid adoption across the AI community. [7]

Despite its technical acclaim, DeepSeek faces increasing global scrutiny over data privacy. Several governments (including Germany, South Korea, Australia, Taiwan, and Italy) have banned its use by public institutions, citing concerns over user data being stored in China.

However, some European AI startups like Synthesia, TextCortex, and ElevenLabs have begun integrating R1 for cost-sensitive tasks.

9. Aleph Alpha

Founded: 2019

Total Funding: $533 million

Flagship Products: Luminous, PhariaAI

Competitive Edge: European data sovereignty, Citation-backed model responses

Aleph Alpha is a German AI research and technology company that aims to deliver European “sovereign AI” with strong data privacy and transparency — countering the dominance of US-based tech giants.

Its flagship Luminous model family — starting with Luminous Base and advancing to Luminous World — is a multilingual, multimodal system capable of processing both text and images. These models have been benchmarked favorably against models like GPT-3, with strong performance in legal reasoning, medical comprehension, and multilingual tasks, particularly for German and other EU languages.

The company has also developed PhariaAI, an “AI operating system” for compliance- and sovereignty-sensitive clients, focusing on deployment flexibility across secure cloud or on-prem environments. It features a tokenizer-free (T-Free) architecture, specifically tailored for German enterprise use, and outperforms models up to ten times larger on localized tasks.

8. Mistral AI

Valuation: $6.2 billion+

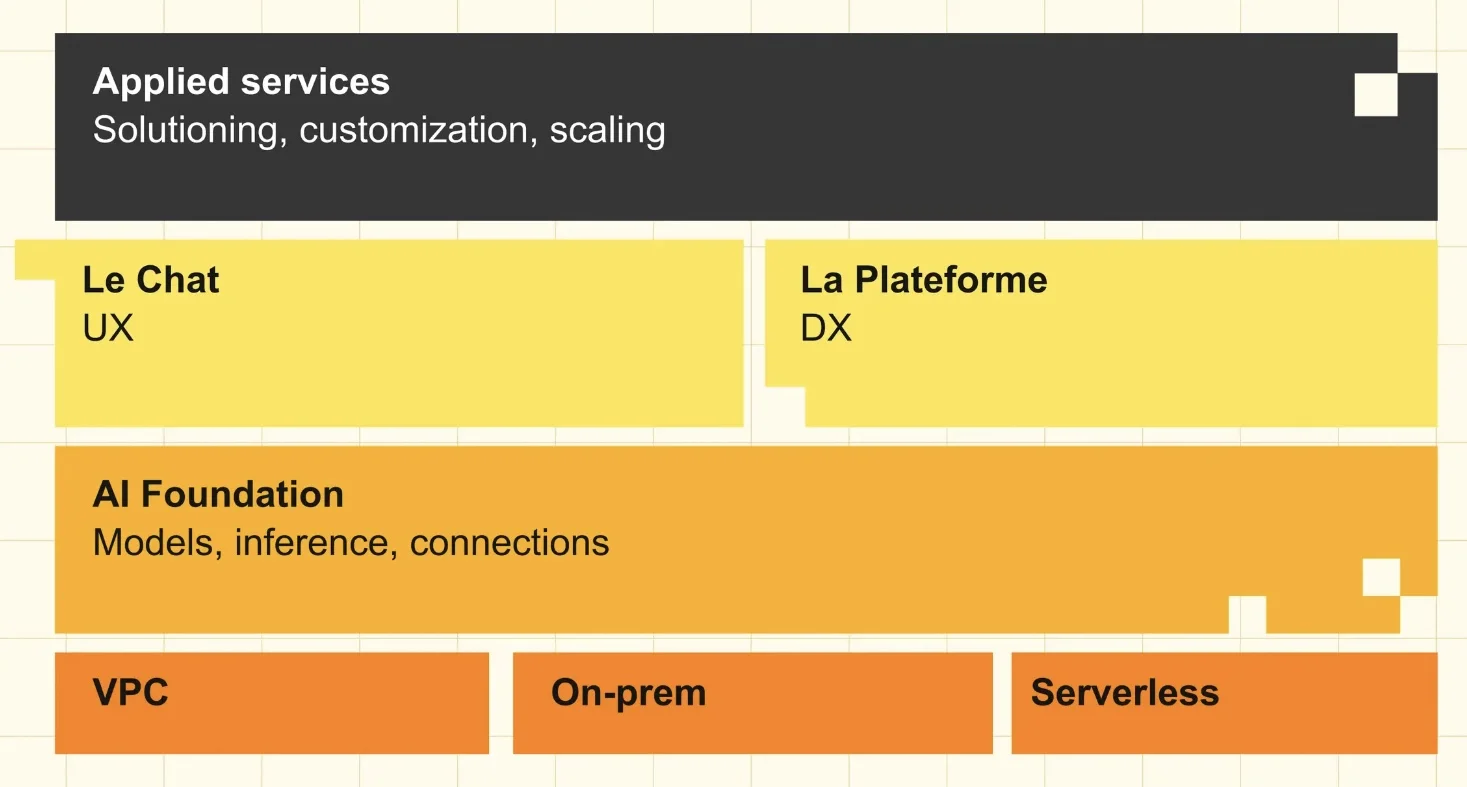

Flagship Products: Le Chat, Mistral Compute (cloud infrastructure)

Competitive Edge: Open-weight leadership, EU-backed trust and compliance

Mistral AI is a fast-growing European artificial intelligence startup based in Paris, France. It gained early recognition by releasing Mistral 7B, a dense decoder-only model with 7 billion parameters, which performed competitively against larger proprietary models.

Following that, the company introduced Mixtral, a mixture-of-experts (MoE) model with 12.9 billion active parameters, designed to deliver strong performance while reducing inference costs. [8]
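To make the cost saving concrete, here is a minimal sketch of top-k expert routing, the general mechanism behind mixture-of-experts layers. It is a toy illustration and not Mistral's implementation: the experts are simple scalar functions, the router scores are hard-coded, and names like `moe_forward` are hypothetical. It assumes 8 experts with 2 selected per token, the configuration described in the cited Mixtral paper.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[float], float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_indices(scores: Sequence[float], k: int) -> List[int]:
    """Indices of the k highest router scores (ties keep original order)."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def moe_forward(x: float, experts: Sequence[Expert],
                router_scores: Sequence[float], k: int = 2) -> float:
    """Run only the top-k experts and mix their outputs by renormalized gate weights."""
    chosen = top_k_indices(router_scores, k)
    gates = softmax([router_scores[i] for i in chosen])
    return sum(g * experts[i](x) for g, i in zip(gates, chosen))


# Toy setup: 8 "experts", each just scales its input by a different factor.
experts: List[Expert] = [lambda x, m=m: m * x for m in range(1, 9)]
# Hypothetical router scores for one token; a real router computes these from the token.
router_scores = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 0.2]

output = moe_forward(3.0, experts, router_scores, k=2)
print(output)
```

Only 2 of the 8 experts run for this token, so the compute per token scales with the active parameters rather than the total parameter count.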

Mistral’s commitment to open-weight releases (downloadable and modifiable models) has made it a darling of the open-source community and enterprise developers looking for privacy and control.

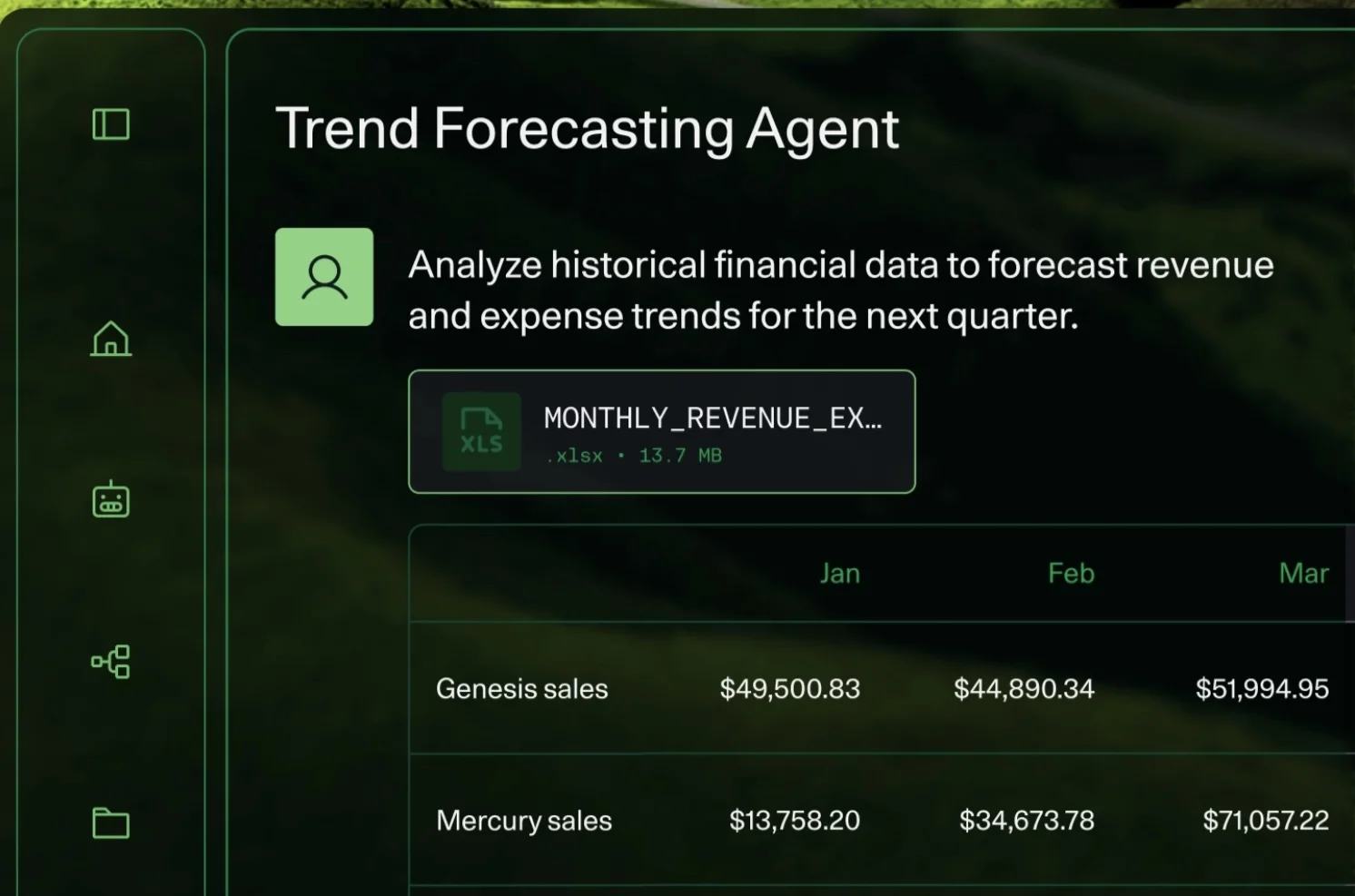

In 2025, the company launched its chatbot “Le Chat” along with an enterprise version, “Le Chat Enterprise,” designed to provide customizable AI assistants with a focus on European languages and regulatory compliance.

They also launched Mistral Compute, an end-to-end infrastructure stack with data centers near Paris with an initial capacity of 40 MW and 18,000 GPUs, scalable to 100 MW. The initiative is supported by the EU and powered by NVIDIA technology.

7. AWS AI

Parent: Amazon

Flagship Products: Amazon Titan, Bedrock, SageMaker

Competitive Edge: Cloud leadership, Model choice via Bedrock

AWS does not offer its own general-purpose chatbot like ChatGPT, but it provides access to multiple state-of-the-art models (including Claude and LLaMA) through Amazon Bedrock and offers the tooling to build custom assistants or copilots on top of them.

Unlike OpenAI, which prioritizes vertical integration (model + UX), Amazon emphasizes horizontal extensibility (bring your own model, train your own, or fine-tune existing ones). OpenAI suits companies that want plug-and-play AI, while Amazon appeals to those that want ownership, privacy, and full-stack control.

AWS AI Services offers a comprehensive stack, ranging from pre-trained APIs (for vision, speech, and text) to customizable models through SageMaker. It also provides access to foundational models via Bedrock, enabling enterprises to build generative AI applications without needing to manage the underlying infrastructure.

In 2024, Amazon invested $4 billion in Anthropic, gaining access to Claude models and cementing AWS as a leading hub for foundation model hosting and development.

That same year, Amazon revealed its Nova family of foundation models (Micro, Lite, Pro, Premier, Canvas, Reel, Sonic), integrated with custom Trainium and Inferentia chips for optimized performance and cost-efficiency.

These models support text, image, video, and speech input and output, enabling robust multimodal capabilities across a wide range of use cases.

Amazon’s infrastructure, powered by Trainium 2 chips, offers a cost advantage — these chips are 30 to 50 percent less expensive than comparable NVIDIA GPU setups, helping reduce the overall cost of model training and inference for customers. [9]

6. Azure AI

Established: 2015

Parent: Microsoft

Flagship Products: Azure ML, Microsoft Copilot

Competitive Edge: Exclusive Partnership with OpenAI

Microsoft has significantly strengthened its position in AI by investing nearly $14 billion in OpenAI and establishing Azure as the primary cloud platform for hosting advanced large language models (LLMs). [10]

While OpenAI focuses on model creation and product interfaces (ChatGPT and DALL·E), Microsoft Azure AI focuses on enterprise-grade hosting, integration, and deployment of those models. Azure provides the cloud backbone and enterprise delivery that brings OpenAI models to the world.

Azure AI supports a full spectrum of services: from machine learning model training, fine-tuning, data labeling, and deployment, to AI-powered APIs for vision, speech, translation, and document intelligence.

It also hosts some of the largest AI supercomputers, built in collaboration with OpenAI and NVIDIA, delivering exascale computing power to its customers. Azure now supports over 85% of Fortune 500 companies, providing enterprise-grade scalability and security. [11]

With the rise of generative AI, Microsoft has introduced a suite of Copilot products across platforms like Word, Excel, Outlook, and GitHub. These Copilots are reshaping productivity by integrating natural language generation directly into everyday business tools.

5. Cohere

Revenue: $100 million+

Flagship Products: Command R/R+, Command A

Competitive Edge: Superior retrieval-augmented capabilities, Enterprise-first security

Cohere is a Canadian AI company that focuses exclusively on enterprise-grade natural language models. It emphasizes secure, private, and cloud-agnostic deployments, not flashy consumer chatbots.

At the heart of Cohere’s offerings are its Command R+ models, trained to handle retrieval-augmented generation (RAG), long-form reasoning, summarization, and business-critical use cases like customer support, internal search, and document processing.

The company is also popular for its best-in-class embedding models, which consistently top benchmarks like MTEB (Massive Text Embedding Benchmark). These embeddings are widely used in search engines, recommendation systems, and chatbots that require memory, grounding, and context.
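As a rough illustration of how embeddings support search and grounding, the sketch below ranks documents by cosine similarity to a query vector. The three-dimensional vectors are made up for the example; in practice they would come from an embedding model. Function names such as `retrieve` are hypothetical and do not correspond to Cohere's API.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec: Sequence[float], index: Dict[str, Sequence[float]],
             top_n: int = 2) -> List[Tuple[str, float]]:
    """Return the top_n documents most similar to the query, best first."""
    scored = [(doc, cosine_similarity(query_vec, vec)) for doc, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_n]


# Made-up embeddings; a real system would obtain these from an embedding model.
index = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "office locations": [0.0, 0.2, 0.9],
}
query_vec = [0.8, 0.2, 0.0]  # hypothetical embedding of "how do I get my money back?"

results = retrieve(query_vec, index)
for doc, score in results:
    print(f"{doc}: {score:.3f}")
```

In a retrieval-augmented setup, the top-ranked passages would then be passed to the language model as context so that its answer is grounded in them.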

In 2025, Cohere released the Command A model that competes neck-and-neck with GPT‑4o and DeepSeek‑V3 on accuracy. According to the company, Command A is up to 2.4 times faster than DeepSeek‑V3 and 1.75 times faster than GPT‑4o.

In May 2025, Cohere’s annualized revenue reached $100 million, up from $35 million in 2023. This growth is largely driven by long-term enterprise contracts (accounting for approximately 85% of its business) with profit margins nearing 80%, particularly in sectors like finance, healthcare, and government. [12]

4. xAI

Founded: 2023

Valuation: $120 billion+

Flagship Product: Grok

Competitive Edge: Integrated real-time data, Politically neutral/unfiltered

Founded by Elon Musk, xAI is designed as a public-benefit corporation to build AI that is “truth-seeking” and competitively positioned against OpenAI and others.

Its flagship product is Grok, a conversational AI assistant inspired by the characteristically rebellious tone of X (formerly Twitter). Designed to be witty, uncensored, and politically independent, Grok stands in contrast to the more safety-optimized and cautious language models developed by other companies.

In early 2025, the company released Grok-3. It was trained using 200,000 GPUs on the Colossus supercomputer and is reported to outperform GPT‑4o on benchmarks like AIME and GPQA.

It integrates real-time access to data from X, offering users a more “in-the-moment” conversational experience. This tight integration with a major social platform gives xAI an unprecedented stream of public discourse data, which helps Grok stay relevant, up-to-date, and responsive to current events.

In June 2025, xAI secured $5 billion in debt and an additional $5 billion in equity to expand its AI infrastructure, further develop its Grok chatbot, and compete with rivals like OpenAI and Anthropic. [13]

3. Meta AI

Model downloads: 1 billion+

Flagship Products: LLaMA 2, 3, and 4

Competitive Edge: Open‑source leadership, Scalable model design

Meta AI, formerly known as Facebook AI Research, has made a significant mark on the global AI landscape by committing to open-source development, making its research and large language models (LLMs) publicly accessible.

In 2023, Meta released LLaMA 2 (Large Language Model Meta AI), an open-weight model family trained on 2 trillion tokens and optimized for commercial use. In 2024, the company followed up with LLaMA 3, which features improved reasoning, multilingual support, and enhanced efficiency for real-world deployment.

Unlike many competitors that lock models behind APIs or proprietary platforms, Meta AI allows researchers, developers, and businesses to fine-tune and deploy LLMs on their own infrastructure.

Meta also integrates these AI models deeply into its products: generative AI is now behind Instagram’s AI stickers, WhatsApp’s assistant bots, Facebook’s AI avatars, and customized chat experiences in Messenger and Threads.

In April 2025, Meta released Llama 4 models (Scout and Maverick) built using a ‘mixture-of-experts’ architecture, capable of processing context windows of up to 10 million tokens. Alongside these, Meta introduced the ‘Behemoth’ model, featuring 288 billion active parameters and nearly two trillion total parameters.

Looking ahead, the company projects it could generate up to $1.4 trillion from generative AI by 2035. [14]

2. Anthropic

Founded: 2021

Valuation: $61.5 billion

Flagship Products: Claude 3 and 4

Competitive Edge: Safety-first architecture

Established by former OpenAI executives and researchers, including Dario Amodei, Anthropic was created in response to growing concerns about the risks and opacity of large language models. Its mission centers on “Constitutional AI,” a safety-first approach that shapes model behavior around an explicit set of ethical principles.

Anthropic’s flagship family of AI models is known as Claude, named after Claude Shannon, the father of information theory. These are large language models that support reasoning, summarization, and natural conversation with human-like fluency.

Claude 3, in particular, made headlines for outperforming GPT-4 in several benchmarks, especially in domains requiring long-context understanding, multi-step reasoning, and factual reliability. [15]

In 2025, the company released Claude 4 Opus and Sonnet, focusing on coding, tool integrations, safety, and price competitiveness. These models are embedded in Amazon Bedrock, Slack, Notion, Quora’s Poe, and other third-party tools, giving Anthropic broad enterprise reach alongside its own Claude chat app.

In March 2025, Anthropic raised $3.5 billion in a Series E round, bringing its total funding to $14.3 billion and its valuation to $61.5 billion.

1. DeepMind

Parent: Alphabet

Flagship Products: Gemini LLMs, AlphaFold3

Competitive Edge: Depth of scientific research and AI safety commitment

DeepMind is a central pillar in Alphabet’s long-term AI strategy. One of its most widely acclaimed accomplishments is AlphaGo, the first AI to defeat a world champion in the game of Go.

Following that, DeepMind created AlphaZero, an algorithm that taught itself to master Go, Chess, and Shogi purely through self-play (without human data).

Later, the company revolutionized biology with AlphaFold, an AI system that predicts 3D protein structures with near-experimental accuracy. By 2023, AlphaFold had predicted the structure of over 200 million proteins, accelerating global pharmaceutical and biomedical research.

DeepMind has since moved into building advanced generative models. Gemini 2.5 (launched in 2025) offers context lengths exceeding 1 million tokens and is reported to outperform competitors on long-context reasoning and memory tasks.

Today, DeepMind excels in multi-domain problem-solving, including:

- Reinforcement Learning (RL) at scale (e.g., AlphaGo, AlphaZero)

- Life sciences (AlphaFold has reshaped computational biology)

- High-context LLMs (Gemini series with longer memory)

- Neuroscience-inspired AI architectures (e.g., neural memory networks)

Many of its models and breakthroughs are embedded across Google’s consumer products, including Search, Gemini, Gmail, and Android. Its models serve billions of users indirectly through these platforms, even if DeepMind itself does not offer a consumer-facing chatbot like ChatGPT.

In 2025, DeepMind introduced a new AI model specifically designed for robotics, capable of running locally on devices without requiring internet connectivity. This model allows bi-arm robots to perform complex tasks by using voice, language, and action processing. [16]

Sources Cited and Additional References

- Technology, OpenAI’s weekly active users surpass 400 million, Reuters

- Hayden Field, OpenAI closes $40 billion funding round, CNBC

- Ben Bergman, Nvidia-backed Israeli AI startup AI21 is raising a $300 million funding round, Business Insider

- Market, LightOn to become Europe’s first listed GenAI startup with Paris IPO, Reuters

- IBM Think 2025, Watsonx Platform fuels agentic AI and hybrid cloud value, Futurism Group

- Computation and Language, DeepSeek-V3 technical report, arXiv

- DeepSeek-R1, Incentivizing reasoning capability in LLMs via reinforcement learning, arXiv

- Machine Learning, Mixtral of Experts, arXiv

- Ayesha Javed, AWS CEO Matt Garman is playing the long game on AI, Time

- Ananya Gairola, Microsoft invested nearly $14 billion in OpenAI, Yahoo Finance

- Alysa Taylor, How real-world businesses are transforming with AI, Microsoft

- Echo Wang, AI firm Cohere doubles annualized revenue to $100 million on enterprise focus, Reuters

- Arjun Kharpal, Elon Musk’s xAI raises $10 billion, CNBC

- Kyle Wiggers, Meta forecasted it would make $1.4 trillion from generative AI by 2035, TechCrunch

- Technology, Claude 3 outperforms GPT-4 across multiple parameters, Indian Express

- Carolina Parada, Gemini robotics on-device brings AI to local robotic devices, DeepMind